Imagine this situation:

You have completed a Data Engineering course. Maybe you even finished a Master’s in Data Engineering. You have watched YouTube videos, taken notes, and done a few small projects. Now you are ready to apply for jobs. But one big question is still in your mind:

- “What do I really need to know to get hired as a Data Engineer?”

- “There are so many tools and technologies… which ones actually matter?”

If you feel this confusion, you are not alone.

Most students who want to become data engineers feel exactly the same.

The good news: you do not need to know everything.

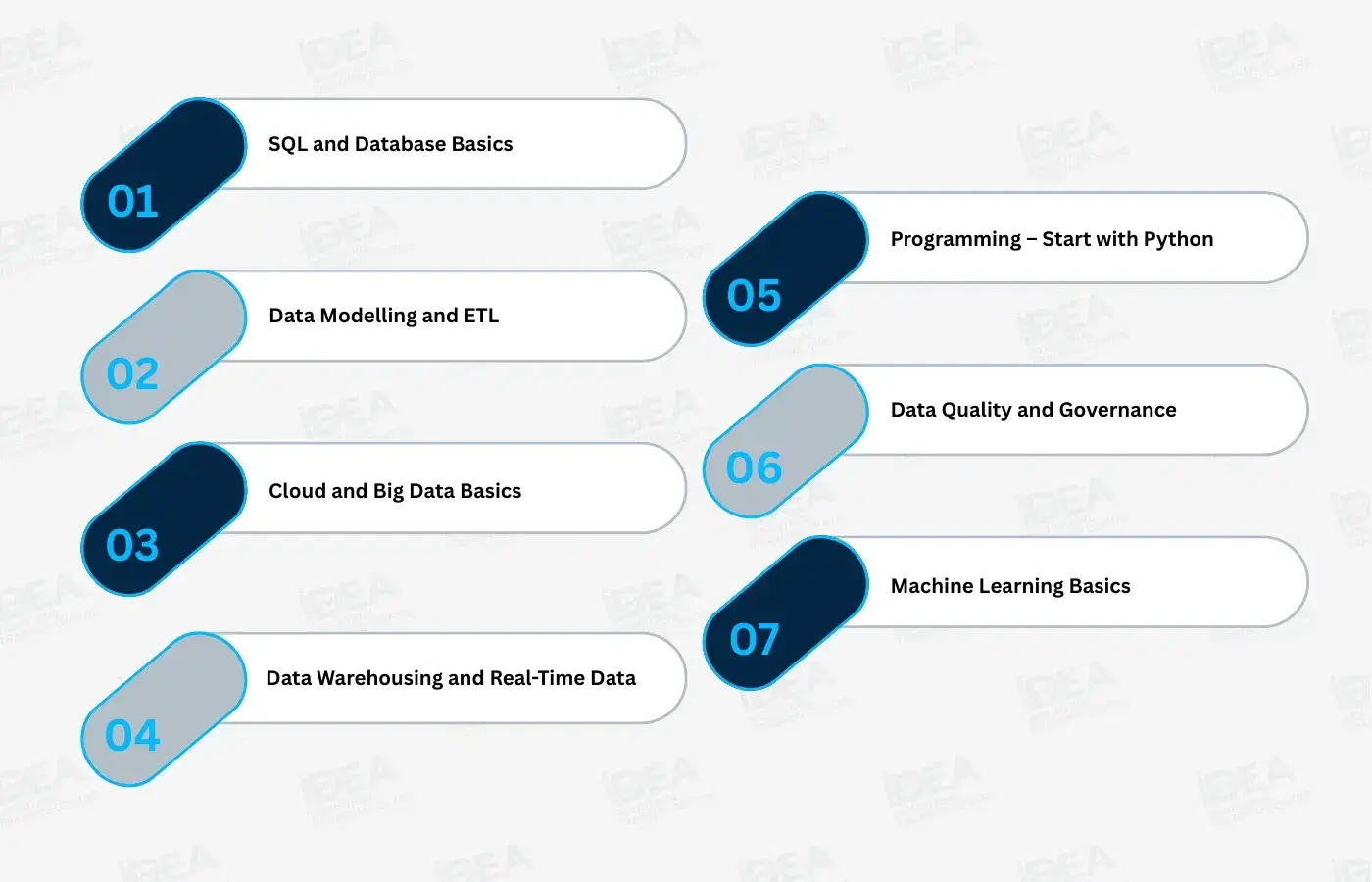

In this blog, we will walk through 7 key skills that most companies expect from a junior data engineer in 2026.

You can use this as a simple roadmap and build these skills step by step.

1. SQL and Database Basics – The Language of Data

The first and most important skill for a data engineer is SQL.

You can think of SQL as the language you use to talk to databases. Wherever data is stored – in banks, e-commerce companies, hospitals, apps – SQL is almost always used.

With SQL, you can:

- Find data

Example: “Show me all orders from last month.” - Filter data

Example: “Show only customers from one city or one product.” - Join tables

Example: Combine customer, order, and payment tables to see the full picture.

You will also hear about two types of databases:

- Relational databases – like MySQL, PostgreSQL (data in rows and columns)

- NoSQL databases – like MongoDB (more flexible structure)

As a student, focus on these SQL basics first:

- SELECT, WHERE, ORDER BY

- JOIN (very important)

- GROUP BY with simple functions like COUNT and SUM

If you are comfortable writing these types of queries, you already have a strong foundation for data engineering.

2. Data Modelling and ETL – Giving Data a Clear Shape

In real companies, data does not come in a clean, ready-made format.

Imagine a shopping website:

- Customer details are stored in one place

- Orders in another

- Payments in another

- Returns somewhere else

Data modelling means deciding how to arrange all of this data so that it is easy to understand and use.

Connected to this is ETL, which stands for:

- Extract – Taking data from different sources (files, APIs, old systems)

- Transform – Cleaning the data, fixing formats, removing errors

- Load – Storing the clean data into a final system (like a data warehouse or data lake)

In simple words:

ETL is the process of turning raw data into useful, clean data.

As a student, you can practice by:

- Taking a messy Excel or CSV file

- Cleaning it (removing duplicates, correcting formats)

- Saving the cleaned version into another file or a small database

This is a small version of what data engineers do on a larger scale in real companies.

3. Cloud and Big Data Basics – Beyond Your Laptop

Modern companies deal with huge amounts of data.

A single laptop or local system is not enough to store and process everything.

That is why most companies use the cloud.

Some popular cloud platforms are:

- Amazon Web Services (AWS)

- Microsoft Azure

- Google Cloud Platform (GCP)

As a beginner, you do not need to master all of them.

But you should understand basic ideas like:

- Storing data in the cloud

- Running jobs or scripts in the cloud

- Using cloud services instead of only local files

You may also hear the term Big Data and tools like:

- Hadoop

- Spark

You can think of it like this:

Cloud = where your data and systems live

Big Data tools = tools that help you work with very large datasets

As a student, a good starting point is:

- Use a free tier of AWS or GCP

- Learn how to upload a file

- Try running a small job or simple query

This basic experience is enough to talk about cloud and big data in a fresher interview.

4. Data Warehousing and Real-Time Data – Past and Present Together

A data warehouse is like a well-organised library for data.

In a data warehouse:

- Old and recent data is stored in a clean, structured format

- Most reports, dashboards, and analytics are built from here

Some common data warehouse tools are: Snowflake, Amazon Redshift, Google BigQuery (you do not need to know all of them deeply at the start).

On the other side, there is real-time data.

Real-time data means data that is processed immediately as it arrives, not hours or days later.

Examples:

- Live stock prices

- Live food delivery tracking

- Fraud detection at the time of payment

Tools like Kafka or Flink are often used for real-time processing.

As a student, focus on understanding concepts first:

- Data warehouse = one central place where clean data is stored for analysis

- Real-time data = data that is processed as soon as it is generated

You can always learn tools later. First, make sure the ideas are clear.

5. Programming – Start with Python

Programming is how you tell the computer exactly what to do.

For data engineering, the most useful language to start with is Python.

Later, you can also learn Java or Scala, especially if you work with big data tools like Spark. But Python is a very strong first step.

With Python, you can:

- Read data from files or APIs

- Clean and transform data

- Connect to databases

- Build simple data pipelines

As a beginner, your roadmap can be:

- Learn Python basics:

- Variables

- Loops

- Functions

- Lists and dictionaries

- Learn the Pandas library:

- Read data from a CSV

- Filter rows

- Group and summarise data

You do not need to be a “perfect coder”.

You just need clear thinking and regular practice on small problems.

6. Data Quality and Governance – Bad Data Means Bad Decisions

Even the best dashboard or model is useless if the underlying data is wrong.

Think about:

- A hospital where a patient’s age is recorded incorrectly

- A bank where a transaction amount is stored in the wrong format

Wrong data can lead to wrong decisions and sometimes serious problems.

That is why data quality and data governance are becoming very important skills.

As a data engineer, part of your job is to help ensure that:

- Data is clean (no obvious errors)

- Data is complete (important fields are not missing)

- Data is not duplicated

- Sensitive data (like ID numbers, card details, phone numbers) is protected

You may hear terms like “privacy”, “compliance”, or “GDPR”.

In simple terms, they all mean: handling user data in a safe and responsible way.

As a student, you can practice by:

- Checking sample datasets for:

- Missing values

- Wrong formats (for example, dates stored as plain text)

- Duplicate rows

- Reading about what personal data (PII) is and why it must be protected

If you talk about data quality and safety in an interview, you will sound more mature and responsible as a fresher.

7. Machine Learning Basics – Knowing How Your Data Is Used

Machine Learning (ML) is about using data to find patterns and make predictions.

As a data engineer, you are not required to build very advanced ML models.

But you should understand the basics so you can support data scientists and build good data pipelines for them.

You should know, at a high level:

- What a training dataset is

- The basic difference between:

- Classification (predicting categories like “spam / not spam”)

- Regression (predicting numbers like price or score)

- Clustering (grouping similar customers together)

- That ML models need clean, well-structured data

As a student, you can:

- Watch a few beginner-friendly videos on:

- “What is supervised vs unsupervised learning?”

- “What is a training and test dataset?”

- Focus on understanding the ideas, not the maths

Even this basic level of ML understanding helps you stand out as a data engineer who knows how their data will be used.

Conclusion

To get hired as a data engineer in 2026, you do not need to know every tool in the world.

You mainly need:

- SQL and databases – to talk to data

- Data modelling and ETL – to organise and move data

- Cloud and big data basics – to understand where data runs in modern systems

- Data warehousing and real-time concepts – to handle historical and live data

- Programming (Python) – to build pipelines and automate work

- Data quality and governance – to make sure data is correct and safe

- Machine learning basics – to understand how your data powers AI

Yes, it can look like a long list at first.

But you do not have to learn everything at once.

Start with one skill, do small practice projects, and grow step by step.

In 6–12 months of focused effort, you can build a strong profile as a beginner data engineer.

Want a clear roadmap for your data engineering journey? Click here to fill the IDEA form and get guidance on your next step.